Learning Resources

On this page, you can find the learning resources that we created as part of the DataLitMT project. These learning resources cover different aspects of MT-specific data literacy as described in the DataLitMT Project Competence Matrix. Based on the difficulty of these learning resources, we classified them as either Basic Level or Advanced Level resources, as defined in the competence matrix. The learning resources are provided in different formats (theoretical overviews, papers, Jupyter notebooks or videos) and some resources include a combination of formats.

Note 1: If you click on one of the Jupyter notebook links below, you will be directed to the online version of this notebook hosted in a Google Colab environment. You can run the notebook directly in this Colab environment, provided you have a Google account. You can also download the notebooks from Colab (no Google account required) or from our GitHub Repository and run them on top of a local Python installation or in another online environment such as Kaggle. However, we recommend that you run the notebooks in Google Colab as they were set up specifically to work in a Colab environment. If you run them in another environment, you may have to install or update several libraries which are already provided in Colab. In some notebooks, we also work with external files and access these files through Google Drive. Handling these external files will also be more complex if you run the notebooks outside of Colab.

If you run the notebooks in Google Colab, a copy of the notebooks will be saved in your Google Drive folder. These copies will become your individual notebook versions; any changes you make to these notebooks will be saved. Feel free to edit the notebooks as you see fit to adapt them to your individual learning or teaching requirements. (Note: If you want to share these adapted notebooks, you have to provide them under the same CC BY-SA 4.0 license as the original notebooks.) If you are unhappy with your changes, you can simply open the notebook links provided on this page again and you will be taken to a copy of the original version.

When you run the first code cell in one of the Colab notebooks linked here, you will be notified that the notebook was created with the Gmail account of one of our team members, and you will be asked whether you wish to work with the notebook anyway. You can safely confirm this and continue to work with the notebook. You can work on one Google Colab notebook at a time (and also select one GPU as a hardware accelerator, as explained in the relevant learning resources). Alternatively, you can upgrade to a paid Colab subscription (e.g. Colab Pro) and work on multiple notebooks at a time.

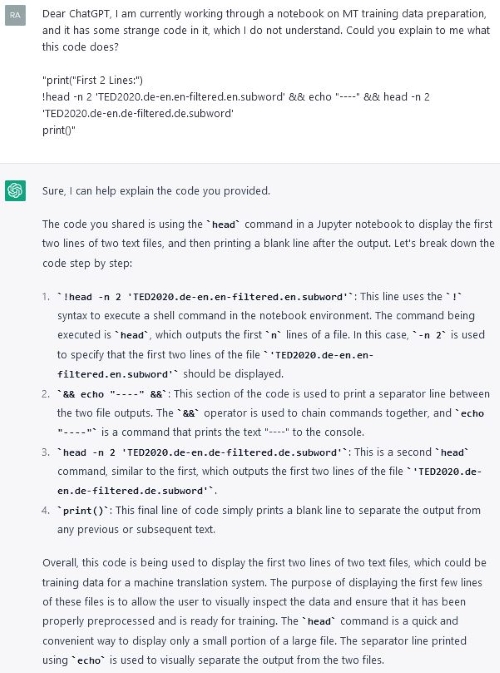

Note 2: The Jupyter notebooks provided as DataLitMT learning resources contain many pre-written Python code cells, which you can simply run. In the tutorial videos, we explain only some general aspects of this Python code. If you would like to know in detail what the individual code cells do, feel free to paste the code into OpenAI's ChatGPT and have the language model explain the code to you. Below, you can see an example of how this would work:

A Brief Introduction to Python Programming

As many of these resources are Jupyter notebooks hosted in a Google Colab environment, we have compiled some introductory concepts of Python programming (in a Google Colab environment) in this notebook. Here, we cover basic concepts such as strings and lists as well as some more advanced concepts that will be useful for many of our learning resources. If you have little or no programming experience, this resource might be a useful starting point before diving into the more complex notebook-based resources referred to below.
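To give a first impression of the basic concepts mentioned above, here is a minimal sketch of working with strings and lists in Python. It is not taken from the introductory notebook; the example sentence and variable names are chosen purely for illustration.

```python
# A sentence stored as a string
sentence = "Machine translation needs data."

# Basic string operations
lowercased = sentence.lower()          # convert to lower case
words = sentence.rstrip(".").split()   # remove the final full stop, split on whitespace

# Basic list operations
word_count = len(words)                # number of items in the list
first_word = words[0]                  # list indices start at 0
longest_word = max(words, key=len)     # item with the greatest length

print(lowercased)
print(words)
print(word_count, first_word, longest_word)
```

You can paste this cell into any Colab notebook and change the sentence to see how the results change.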

Data Context for MT: Conceptual Overview & Resources

Basic Level

In this GitHub folder, you can find a short paper establishing a data context for machine translation. The paper provides a general conceptual overview and refers you to several resources outside of DataLitMT which are relevant to a comprehensive (MT-specific) data literacy education.

Data Ethics and MT

Basic Level

In this GitHub folder, you can find a paper discussing data ethics and MT.

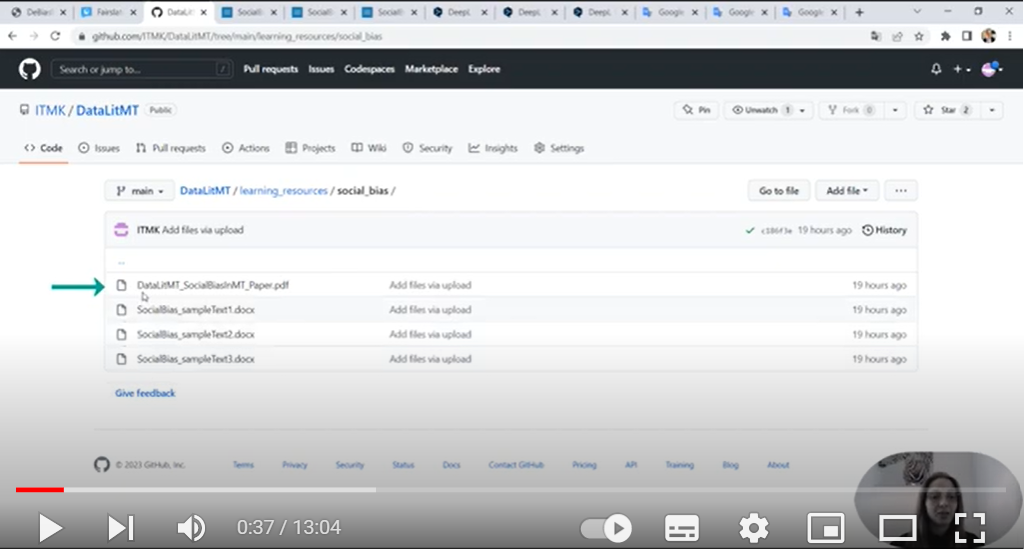

Social Bias in MT

Basic Level

In this GitHub folder, you can find a paper discussing issues of social bias in MT, focusing specifically on gender bias. In this tutorial video, we illustrate a range of linguistic triggers which lead MT systems such as Google Translate and DeepL to produce a gender-biased translation, and we present the bias-aware MT system Fairslator and the DeBiasByUs platform for collecting instances of gender bias identified in MT systems.

You can also watch the tutorial video by clicking the video below:

Advanced Level

Coming soon: An advanced companion resource on the topic of gender bias in MT.

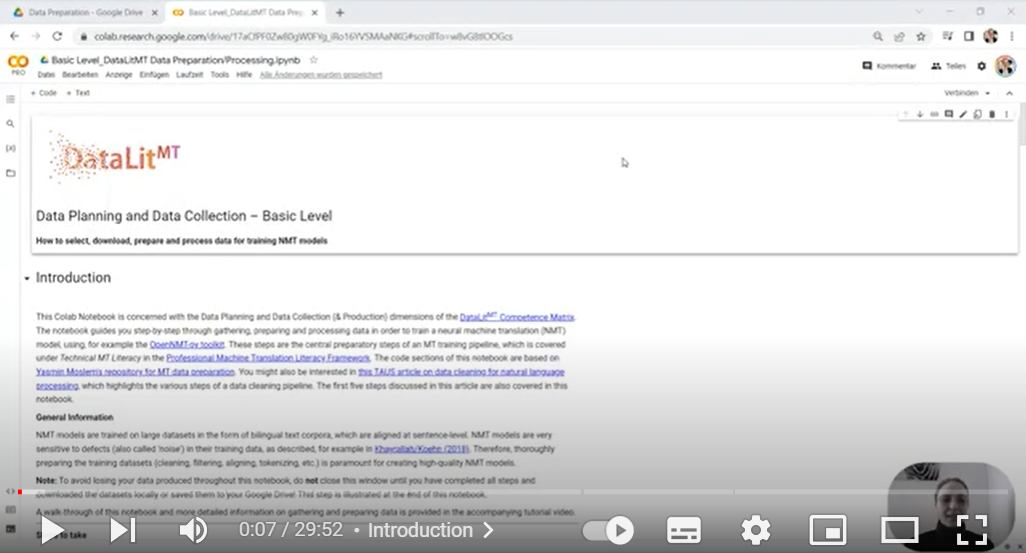

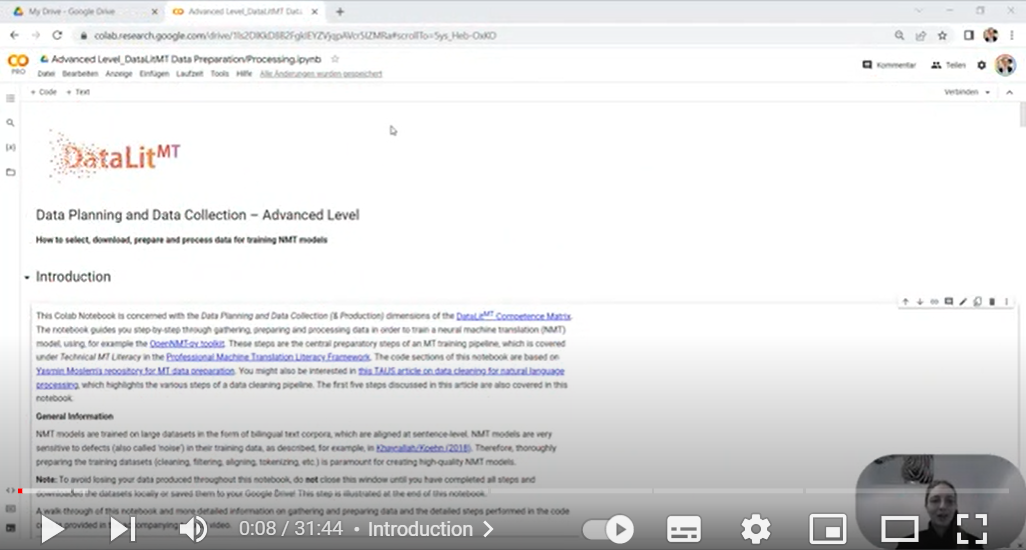

MT Training Data Preparation

This learning resource is available at Basic and Advanced Levels. It guides you through the process of preparing data for NMT training by filtering, cleaning, tokenizing and splitting a particular dataset.
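The four steps named above (filtering, cleaning, tokenizing and splitting) can be sketched in a few lines of plain Python. This is a simplified illustration under our own assumptions, not the code used in the notebooks: the toy sentence pairs, the length-ratio threshold of 3.0, the whitespace tokenizer and the 75/25 split are all hypothetical choices. Real NMT pipelines typically use subword tokenizers and much larger datasets.

```python
import random

# Toy parallel corpus (hypothetical English-German sentence pairs)
pairs = [
    ("Hello world !", "Hallo Welt !"),
    ("  This is a   test.  ", "Das ist ein Test."),
    ("", "Leerer Quellsatz"),                                # empty source side
    ("Hello world !", "Hallo Welt !"),                       # exact duplicate
    ("Short", "Ein sehr sehr sehr sehr sehr langer Satz"),   # implausible length ratio
    ("Good morning", "Guten Morgen"),
    ("Thank you very much", "Vielen Dank"),
]

def clean(text):
    """Collapse runs of whitespace and strip leading/trailing spaces."""
    return " ".join(text.split())

def keep(src, tgt, max_ratio=3.0):
    """Keep a pair only if both sides are non-empty and similar in length."""
    if not src or not tgt:
        return False
    n_src, n_tgt = len(src.split()), len(tgt.split())
    return max(n_src, n_tgt) / min(n_src, n_tgt) <= max_ratio

def tokenize(text):
    """Very simple tokenizer: lower-case and split on whitespace."""
    return text.lower().split()

# 1) Clean, 2) remove duplicates (order-preserving), 3) filter
cleaned = [(clean(s), clean(t)) for s, t in pairs]
deduplicated = list(dict.fromkeys(cleaned))
filtered = [(s, t) for s, t in deduplicated if keep(s, t)]

# 4) Tokenize both sides of every remaining pair
tokenized = [(tokenize(s), tokenize(t)) for s, t in filtered]

# 5) Shuffle reproducibly and split into training and test sets
random.Random(42).shuffle(tokenized)
n_train = int(len(tokenized) * 0.75)
train, test = tokenized[:n_train], tokenized[n_train:]

print(len(pairs), "pairs before preparation")
print(len(filtered), "pairs after cleaning and filtering")
print(len(train), "training pairs,", len(test), "test pairs")
```

Fixing the random seed makes the split reproducible, which helps when you want to compare models trained on the same data.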

Basic Level

Click on this link to access the Colab notebook on MT training data preparation at the Basic Level. You can watch the tutorial video complementing this notebook by clicking the video below:

Advanced Level

Click on this link to access the Colab notebook on MT training data preparation at the Advanced Level. You can watch the tutorial video complementing this notebook by clicking the video below:

Training an NMT Model

Advanced Level

In this Advanced Level resource, you will learn how to train your own NMT model from scratch (optionally, using the data you have prepared in the learning resource on MT training data preparation) and evaluate the quality of the translations produced by this model. Click on this link to access the Colab notebook on MT model training. You can watch the tutorial video complementing this notebook by clicking the video below:

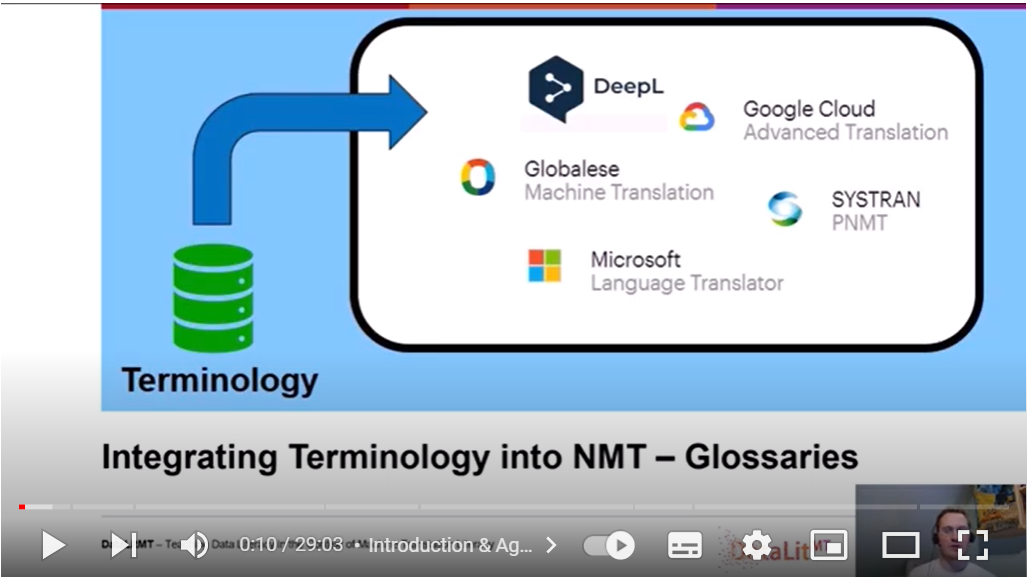

Terminology Integration into MT Models

Basic Level

In this Basic Level resource, you will learn how terminology data can be integrated into NMT to ensure consistent use of terminology in the output. The paper, which you can find in this GitHub folder, will provide you with introductory information and a general overview of the topic. In addition, the video below will introduce you to the glossary feature of DeepL. This feature can be used to enforce desired target terms in NMT output. You can find the companion PDF (for the video) in this GitHub folder.
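To illustrate what consistent use of terminology means in practice, the following sketch checks whether an MT output contains the target terms that a glossary prescribes. It does not enforce terms the way a glossary feature does, and it is not based on any DeepL interface; the glossary entries, the sentences and the function name `find_glossary_violations` are hypothetical. The check uses simple substring matching, so it ignores inflected forms.

```python
def find_glossary_violations(source, target, glossary):
    """Return (source_term, expected_target_term) pairs where the source term
    occurs in the source text but the expected term is missing in the target."""
    source_lower, target_lower = source.lower(), target.lower()
    violations = []
    for source_term, target_term in glossary.items():
        in_source = source_term.lower() in source_lower
        in_target = target_term.lower() in target_lower
        if in_source and not in_target:
            violations.append((source_term, target_term))
    return violations

# Hypothetical English-German glossary
glossary = {
    "translation memory": "Translation-Memory",
    "term base": "Terminologiedatenbank",
}

source = "Import the translation memory and the term base."
mt_output = "Importieren Sie den Übersetzungsspeicher und die Terminologiedatenbank."

violations = find_glossary_violations(source, mt_output, glossary)
print(violations)
```

Here the output uses a different German rendering for "translation memory" than the glossary prescribes, so this entry is reported, while "term base" is translated as required.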

You can also have a look at this short and comprehensive video by DeepL explaining their glossary feature.

Advanced Level

In this Advanced Level resource, you will learn how to prepare data with a focus on terminology preparation (using TM2TB, Excel, and a glossary converter). In this GitHub folder, you can find a translation memory if you would like to work through this resource yourself. Click on this link to access the Colab notebook on the TM2TB aspect of terminology preparation. You can watch the tutorial video on terminology extraction and cleaning training data by clicking the video below:

Automatic MT Quality Evaluation

This learning resource is available at Basic and Advanced Levels. It guides you through evaluating the quality of machine-translated texts by calculating a range of automatic evaluation metrics. We cover two string matching-based metrics (BLEU and TER) and two embedding-based metrics (BERTScore and COMET). You will learn how these metrics work and how to calculate them at sentence and document levels. The Advanced Level resource discusses in much more detail how the individual scores are actually calculated.
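As a rough intuition for the two string matching-based metrics, the sketch below computes the building blocks they rest on: clipped n-gram precision (the core of BLEU) and a word-level edit distance normalised by reference length (the core of TER). It is a deliberately simplified illustration, not the implementation used in the notebooks: full BLEU additionally combines several n-gram orders and applies a brevity penalty, and full TER also allows block shifts. The example sentences and function names are our own.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return all n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def clipped_precision(hypothesis, reference, n):
    """Share of hypothesis n-grams that also occur in the reference.
    Each n-gram is credited at most as often as it appears in the reference."""
    hyp_counts = Counter(ngrams(hypothesis, n))
    ref_counts = Counter(ngrams(reference, n))
    total = sum(hyp_counts.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
    return matched / total

def word_edit_distance(hypothesis, reference):
    """Minimum number of word insertions, deletions and substitutions
    needed to turn the hypothesis into the reference (no shifts)."""
    previous = list(range(len(reference) + 1))
    for i, hyp_word in enumerate(hypothesis, start=1):
        current = [i]
        for j, ref_word in enumerate(reference, start=1):
            cost = 0 if hyp_word == ref_word else 1
            current.append(min(previous[j] + 1,          # delete hypothesis word
                               current[j - 1] + 1,       # insert reference word
                               previous[j - 1] + cost))  # match or substitute
        previous = current
    return previous[-1]

reference = "the cat sat on the mat".split()
hypothesis = "the cat is on the mat".split()

unigram_precision = clipped_precision(hypothesis, reference, 1)
bigram_precision = clipped_precision(hypothesis, reference, 2)
edits = word_edit_distance(hypothesis, reference)
edit_rate = edits / len(reference)

print(f"Unigram precision: {unigram_precision:.3f}")
print(f"Bigram precision:  {bigram_precision:.3f}")
print(f"Edits: {edits}, edit rate: {edit_rate:.3f}")
```

Note the opposite directions of the two scores: a higher n-gram precision indicates a closer match with the reference, whereas a lower edit rate does.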

Note that the COMET scores shown in the tutorial videos below differ from the COMET scores calculated in the current versions of the notebooks linked below. This is because COMET has been updated in the meantime and the models used in the videos can no longer be accessed. The models used in the current notebooks can be expected to become deprecated at some point in the future as well. Status updates on COMET models can be found here.

Basic Level

Click on this link to access the Colab notebook on automatic MT quality evaluation at the Basic Level. You can watch the tutorial video complementing this notebook by clicking the video below:

Advanced Level

Click on this link to access the Colab notebook on automatic MT quality evaluation at the Advanced Level. You can watch the tutorial video complementing this notebook by clicking the video below:

Companion Notebooks

We also prepared three companion notebooks complementing the ‘main’ notebooks on automatic MT quality evaluation at Basic and Advanced Levels. The first two notebooks provide more detailed background information on string matching- and embedding-based MT quality metrics, and the third notebook allows you to automatically evaluate MT quality at document level.

String Matching-based Metrics

Click on the image below to access the companion Colab notebook on string matching-based metrics.

Embedding-based Metrics

Click on the image below to access the companion Colab notebook on embedding-based metrics.

Evaluation at Document Level

Click on this link to access the companion Colab notebook on automatic MT quality evaluation at document level. You can watch the tutorial video complementing this notebook by clicking the video below:

Pre- and Post-Editing

Basic Level

In this GitHub folder, you can find a paper on the topic of pre- and post-editing as well as the files that we refer to in the paper and work with in our notebook. Click on this link to access the notebook on Data Manipulation in the Context of Machine Translation: Pre- and Post-Editing at the Basic Level.

Machine Translationese & Post-Editese

In this GitHub folder, you can find a paper on the topic of machine translationese & post-editese that can be read at both Basic and Advanced Levels. In this folder, you can also find the files that we work with in our Basic and Advanced Level resources on this topic.

Basic Level

You can watch the tutorial video on analysing texts for instances of machine translationese and post-editese at Basic Level by clicking the video below:

Advanced Level

Click on this link to access the Colab notebook on analysing texts for instances of machine translationese and post-editese at Advanced Level. You can watch the tutorial video complementing this notebook by clicking the video below: